
I am a Ph.D. student in Computer Science at Stanford University, advised by Chelsea Finn and Percy Liang. I am also a research scientist intern in the Deep Imagination Research team at NVIDIA. I conduct research in AI and robotics. Previously, I graduated summa cum laude from UCLA with a bachelor's degree in Computer Science and received a master's degree in Computer Science from Stanford. At Stanford, I was fortunate to be one of five students in my graduating class supported by the Siebel Scholarship. I am passionate about teaching and mentoring students: I served as the Head TA for CS231N in Spring 2022 and as a research mentor for LINXS (a Stanford CS diversity outreach program) in Summer 2023. I also founded Deep Learning Portal, an AI mentorship and outreach program that provides high-quality, personalized guidance to disadvantaged college students so that they can learn deep learning fundamentals quickly.

Google Scholar / Twitter / LinkedIn / GitHub


Research

I am currently interested in leveraging multimodal foundation models to learn high-performing, generalizable policies in robotics.


Fine-Tuning Vision-Language-Action Models: Optimizing Speed and Success

Moo Jin Kim, Chelsea Finn, Percy Liang

RSS 2025

project website / arXiv / code / models

We introduce an Optimized Fine-Tuning (OFT) recipe for vision-language-action models that enables the 7B OpenVLA model to generate actions 25-50x faster and to perform language-driven bimanual manipulation tasks on a new robot with higher success rates. OpenVLA-OFT achieves a state-of-the-art 97.1% average success rate on the LIBERO simulation benchmark and also outperforms π0, RDT-1B, Diffusion Policy, and ACT in our real-world ALOHA robot experiments.


OpenVLA: An Open-Source Vision-Language-Action Model

Moo Jin Kim*, Karl Pertsch*, Siddharth Karamcheti*, Ted Xiao, Ashwin Balakrishna, Suraj Nair, Rafael Rafailov, Ethan Foster, Grace Lam, Pannag Sanketi, Quan Vuong, Thomas Kollar, Benjamin Burchfiel, Russ Tedrake, Dorsa Sadigh, Sergey Levine, Percy Liang, Chelsea Finn

CoRL 2024 (Outstanding Paper Award Finalist: Top 6 papers)

project website / arXiv / code / models

(* co-first authorship)

We introduce OpenVLA, a 7B-parameter open-source vision-language-action model (VLA), pretrained on 970k robot episodes from the Open X-Embodiment dataset. OpenVLA sets a new state of the art for generalist robot manipulation policies. It supports controlling multiple robots out of the box and can be quickly adapted to new robot setups via parameter-efficient fine-tuning. OpenVLA models, code, and training data are fully open-source.


Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Open X-Embodiment Collaboration, ... , Moo Jin Kim, ...

IEEE ICRA 2024 (Best Conference Paper Award)

project website / paper / blog post / data

Authors listed in alphabetical order; project led by Quan Vuong and Karl Pertsch; Stanford evaluations led by Moo Jin Kim and Max Du.

We introduce the Open X-Embodiment Dataset, the largest robot learning dataset to date with 1M+ real robot trajectories, spanning 22 robot embodiments. We train large, Transformer-based policies on the dataset (RT-1-X, RT-2-X) and show that co-training with our diverse dataset substantially improves performance.


BridgeData V2: A Dataset for Robot Learning at Scale

Homer Walke, Kevin Black, Abraham Lee, Moo Jin Kim, Max Du, Chongyi Zheng, Tony Zhao, Philippe Hansen-Estruch, Quan Vuong, Andre He, Vivek Myers, Kuan Fang, Chelsea Finn, Sergey Levine

CoRL 2023

project website / arXiv

We introduce BridgeData V2, a large and diverse dataset of robotic manipulation behaviors designed to facilitate research on scalable robot learning. BridgeData V2 contains 60,096 trajectories collected across 24 environments on a publicly available low-cost robot.


NeRF in the Palm of Your Hand: Corrective Augmentation for Robotics via Novel-View Synthesis

Allan Zhou*, Moo Jin Kim*, Lirui Wang, Pete Florence, Chelsea Finn

CVPR 2023

project website / arXiv

(* co-first authorship)

We leverage neural radiance fields (NeRFs) to generate perturbed end-effector wrist camera viewpoints while simultaneously calculating corrective actions. This augmentation improves the absolute success rates of 6-DoF robotic grasping policies by 22.5% on average.


Giving Robots a Hand: Learning Generalizable Manipulation with Eye-in-Hand Human Video Demonstrations

Moo Jin Kim, Jiajun Wu, Chelsea Finn

NeurIPS Deep Reinforcement Learning Workshop 2022

project website / arXiv

We augment narrow robotic imitation datasets with broad unlabeled human video demonstrations to greatly enhance the generalization of eye-in-hand visuomotor policies. Although a clear visual domain gap exists between human and robot data, our framework does not need any explicit domain adaptation method, and it enables robots to generalize to both new environment configurations and new tasks that are unseen in the robot demonstration data.


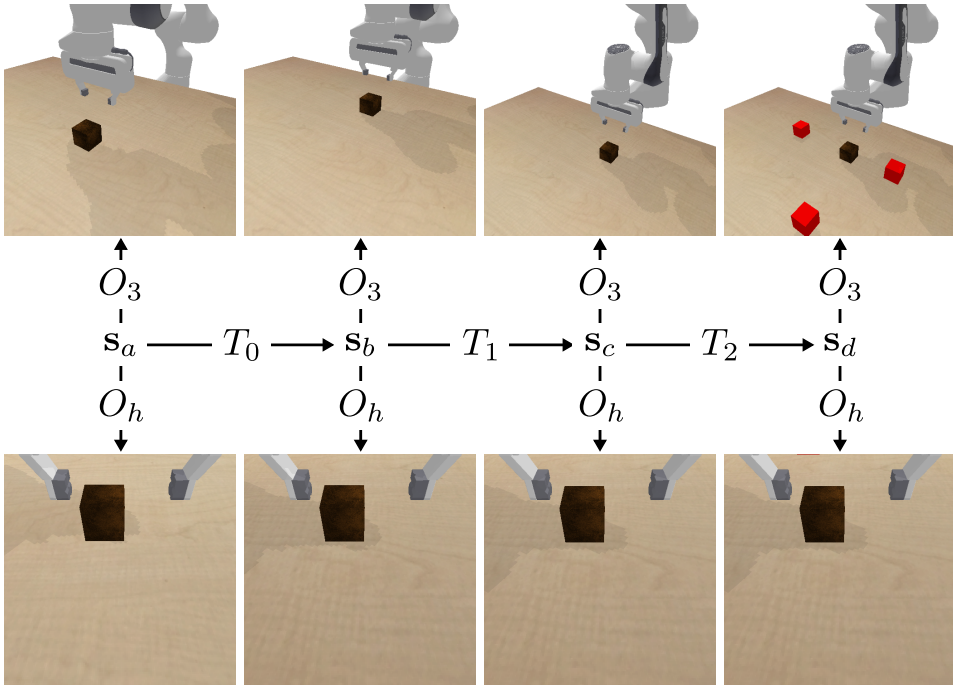

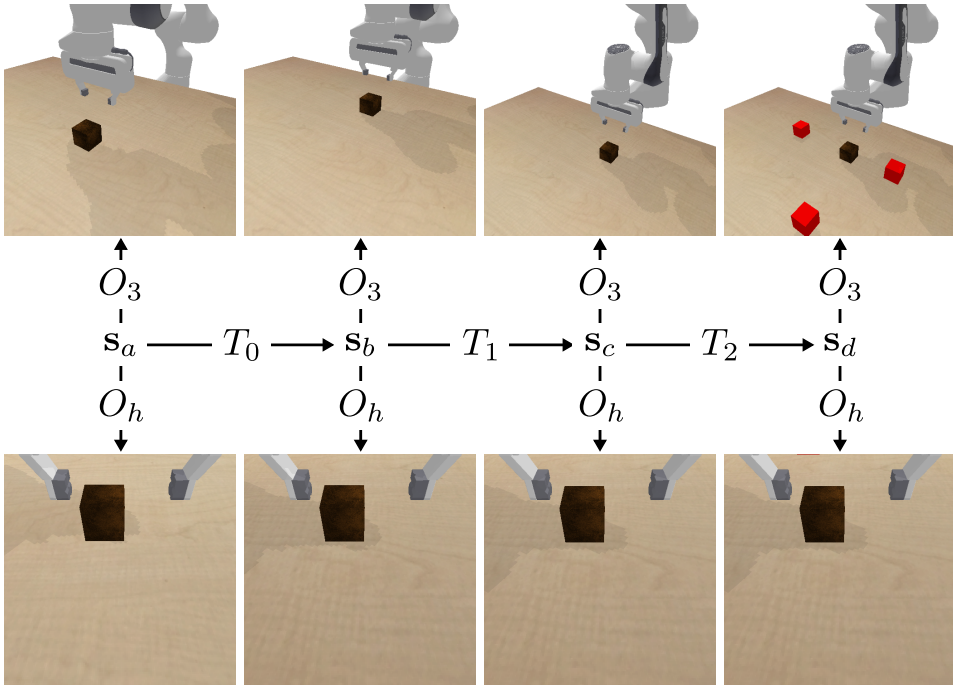

Vision-Based Manipulators Need to Also See from Their Hands

Kyle Hsu*, Moo Jin Kim*, Rafael Rafailov, Jiajun Wu, Chelsea Finn

ICLR 2022 (Oral Presentation: Top 1.6% of submissions)

project website / arXiv / code

(* co-first authorship; order determined by coin flip)

We conduct extensive experiments to show that utilizing a hand-centric (eye-in-hand) visual perspective consistently improves training efficiency and out-of-distribution generalization in vision-based robotic manipulation. These benefits hold across a variety of learning algorithms, experimental settings, and distribution shifts. For settings where hand-centric observability is not sufficient, we propose a simple yet effective approach that incorporates a third-person information stream while regularizing it via a variational information bottleneck to prevent overfitting.